Large databases, then and now

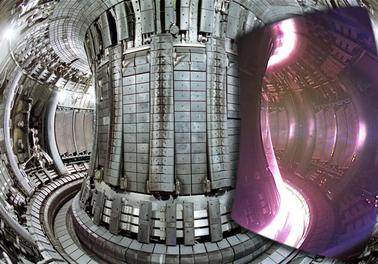

Back in 1998, I had a job at JET, the Joint European Torus, one of the world centres of research into nuclear fusion. The complex sprawled over most of the former RAF base at Culham, but the heart was the Machine: a huge torus of metal, machined to precisions of thousandths of an inch, which during experiments contained a few milligrammes of heavy hydrogen at a temperature ten times that of the Sun's core.

The JET Machine: red-hot nucleus-on-nucleus action

This machine pumped out what was, at the time, a daft amount of data: all the instrument readings and all the analyses of those readings came to around 120MB per run of the Machine (or "shot", as they were called). This was then fed into a wonderfully crockish home-brewed database system running on an IBM 390 mainframe. The system pre-dated the current ubiquity of relational databases, so it was actually a hierarchical database, something that most modern geeks have never even heard of. Storage was arranged in layers: recently requested data was held in RAM; data that hadn't been requested for a while lived on hard drives; data that had gone unrequested for longer than that lived on tape drives; and data that had gone unrequested for long enough was exiled to the Stygian depths of the tape store. Your request for, say, deuterium-band emission spectra from May 1983 would cause a small, red-eyed robot to trundle off into the tape store, fetch the tape holding your data series, trundle back, and physically load it into the mainframe. I used to get lifts to work from the database administrator, and he hated the little robot: it was apparently always breaking down, and he'd then have to crawl in and fix it. "And the red eyes are really sinister! It looks like it's plotting against you."
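The layered arrangement described above is what's usually called hierarchical storage management: data sinks to slower, cheaper tiers the longer it goes unrequested, and comes back up when someone asks for it. Here's a toy Python sketch of that kind of policy. The four tier names come from the description above; the idle thresholds, the class and method names, and the rule that fetched data goes straight back to RAM are all hypothetical, since I don't know the actual rules JET's system used.

```python
from dataclasses import dataclass

# Tier order as described in the post; everything else here is hypothetical.
TIERS = ["RAM", "hard disk", "tape drive", "tape store"]
# Hypothetical idle time (in days) after which an item leaves tier i for tier i + 1.
DEMOTE_AFTER_DAYS = [1, 30, 365]


@dataclass
class Item:
    name: str
    tier: int           # index into TIERS
    last_access: float  # day number of the most recent request


class TieredStore:
    """Toy hierarchical storage: idle data sinks, requested data rises to RAM."""

    def __init__(self):
        self.items = {}  # name -> Item

    def store(self, name, today):
        # New data starts in the fastest tier.
        self.items[name] = Item(name, 0, today)

    def sweep(self, today):
        # Demote each item as far down as its idle time warrants.
        for item in self.items.values():
            idle = today - item.last_access
            while (item.tier < len(DEMOTE_AFTER_DAYS)
                   and idle > DEMOTE_AFTER_DAYS[item.tier]):
                item.tier += 1

    def fetch(self, name, today):
        # Report which tier the data was found in, then promote it back to RAM.
        # (A fetch from the tape store is where the red-eyed robot would trundle off.)
        item = self.items[name]
        found_in = TIERS[item.tier]
        item.tier = 0
        item.last_access = today
        return found_in


if __name__ == "__main__":
    archive = TieredStore()
    archive.store("spectra_1983_05", today=0)
    archive.store("recent_shot", today=395)
    archive.sweep(today=400)
    print(archive.fetch("spectra_1983_05", today=400))  # idle 400 days: tape store
    print(archive.fetch("recent_shot", today=400))      # idle 5 days: hard disk
```

Demoting by time since last access, rather than by age of the data, is what lets a hot dataset from 1983 stay in RAM while an ignored one from last month sinks to tape.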

The JET Joint Undertaking had been founded in 1978, and had been collecting data for most of the time since. This all added up to a lot of data. My friend the database administrator apparently used to go to conferences for administrators of large databases just to laugh at what everyone else considered "large".

We can estimate the total amount of data in the database with a simple calculation. When I was there, we were producing about 120MB per shot, and running about ten shots a day (this was running the Machine flat-out: we were about to be taken over by UKAEA, and everyone feared our budget would be slashed imminently). So we were producing around 1.2GB per day. If we'd been collecting data at that rate since day 1, we'd have collected 1.2GB * 20 * 365 ≈ 8.8TB, but that's an overestimate, because the data rate would have been much lower in the early years.

Many things in computing follow Moore's Law, usually quoted as saying that [quantity] doubles every 18 months (this originally applied to the number of transistors that would fit onto a given area of silicon, but that drove a lot of other things). Assume JET's data acquisition followed Moore's Law, set time t = 0 in the winter of 1998, and measure t in days. Then the daily data rate is data(t) = 1.2GB * e^(kt), where k = ln 2/(18 * 30), treating 18 months as 540 days. We want to integrate data(t) between -20*365 and 0. The integral of 1.2e^(kt) is of course 1.2e^(kt)/k, so we want 1.2e^0/k - 1.2e^(-k * 20 * 365)/k. The second term is tiny (e^(-9.4) is about 0.00008), so this is near-as-dammit equal to 1.2/k ≈ 935GB, or 900GB to one significant figure. So we get an estimate of 900GB (which is to say nine hundred thousand megabytes) for the total amount of data collected by the JET project in twenty years.
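The back-of-an-envelope sum is easy to check by machine. This is a minimal Python sketch, assuming decimal units (1GB = 1000MB), 18 months ≈ 540 days, and a data rate that grows smoothly and exponentially; the variable and function names are purely illustrative. It computes the flat-rate upper bound, the closed-form integral, and a crude day-by-day numerical sum as a cross-check on the calculus.

```python
import math

# Figures from the post: ~120MB per shot, ~10 shots per day, over ~20 years.
MB_PER_SHOT = 120
SHOTS_PER_DAY = 10
DAYS = 20 * 365

# Assumes decimal units: 1GB = 1000MB, so this is 1.2 GB/day.
rate_gb_per_day = MB_PER_SHOT * SHOTS_PER_DAY / 1000

# Upper bound: the 1998 rate sustained for the whole twenty years.
flat_total_gb = rate_gb_per_day * DAYS

# Growth model: the rate doubles every 18 months (taken as 18 * 30 days),
# with t = 0 in winter 1998 and t measured in days.
k = math.log(2) / (18 * 30)


def rate(t_days):
    """Daily data rate in GB at time t (t <= 0 means before 1998)."""
    return rate_gb_per_day * math.exp(k * t_days)


# Closed form of the integral of rate(t) from -DAYS to 0.
closed_form_gb = rate_gb_per_day / k * (1 - math.exp(-k * DAYS))

# Cross-check: midpoint-rule sum with one step per day.
numeric_gb = sum(rate(-DAYS + d + 0.5) for d in range(DAYS))

print(f"flat-rate upper bound:      {flat_total_gb:.0f} GB")
print(f"growth model (closed form): {closed_form_gb:.0f} GB")
print(f"growth model (numeric):     {numeric_gb:.0f} GB")
```

Running it should print 8760 GB for the flat-rate bound and about 935 GB for both growth-model figures, which rounds to 900GB at one significant figure. The `math.exp(-k * DAYS)` factor is the term that gets dropped as negligible in the hand calculation.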

As it happens, 900GB is only slightly larger than dreamstothesky's porn collection, which currently stands at around 880GB...

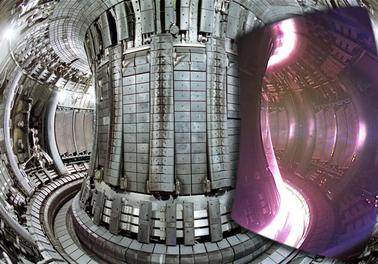
