(no subject)

You can find their paper about a quite advanced animation system, planned for publication at SIGGRAPH this year, and last year’s GDC talk (http://www.chrishecker.com/How_To_Animate_a_Character_You%27ve_Never_Seen_Before). My interest, though, was more in the critter’s mesh generation. I had the feeling it was somewhat inspired by all the sketch-modeling papers of the last decade. You can hardly create anything highly detailed, and you are not given much control over the mesh creation process. But at the same time, with just the basic operations you can create various kinds of characters and add details using the provided templates.

The main thing is the body, which is specified by the skeleton. Since everything is done in real time, I expected the body mesh to be some sort of NURBS surface based on the skeleton spline:

Attachment of limbs could be handled on a per-polygon basis as a CSG union operator (and you don’t see really smooth blending between them; more on this later). But you can also see vertex “flickering” while adding new limbs, which in theory shouldn’t be there with parameterized curves. One can also notice “aliasing” artifacts on very thin limbs (their contour looks like a “step” function). Those are known problems of popular polygonization techniques working on a uniform grid (e.g. marching cubes/tetrahedra).

So my guess is that some skeleton-based implicit surface is used here (not sure about metaballs, but a simple quadratic field would be one of the fastest kernels to use along the spline). I guess the blending between the limbs and the body doesn’t look really smooth because the fields of the body and the limbs are not simply summed (as is done for different parts of the creature). They are probably using the simplest CSG union, which is a max function (it introduces a C1 discontinuity, i.e. a discontinuous gradient, which is even more noticeable because of the interpolation of lighting attributes across the polygon). I think the union operation is used instead of summation to prevent a known problem called “bulging”: the surface changes at locations where separate implicit primitives intersect. They were probably trying to prevent uncontrollable growth of the resulting surface when a lot of limbs intersect:

Union of the fields (max(F, G)) | Sum of the fields (F + G)

This picture demonstrates that the fields of different primitives can dramatically change the resulting surface and even its topology (separate limbs can degenerate into one big blob).

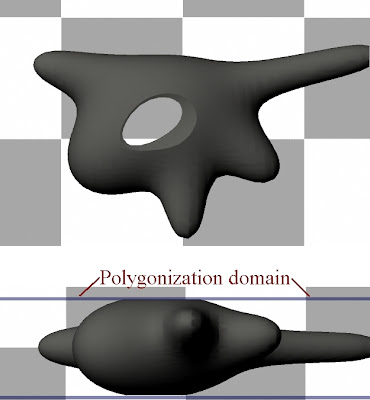

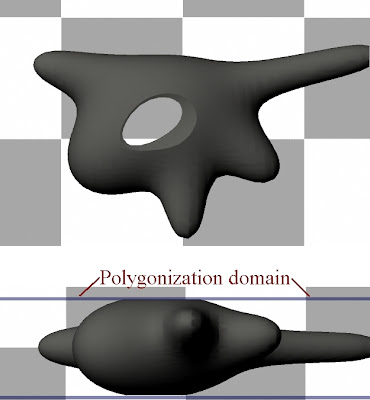
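To make the max-versus-sum difference concrete, here is a minimal sketch. Everything in it is an assumption made for illustration: the quadratic kernel, the radius, the iso-value of 0.5 and the two bone segments are made-up numbers, not anything extracted from the demo.

```python
import math

ISO = 0.5  # hypothetical iso-value at which the surface is extracted

def segment_distance(p, a, b):
    """Distance from point p to the bone segment a-b (all 3-tuples)."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    length_sq = sum(c * c for c in ab)
    t = 0.0
    if length_sq > 0.0:
        # Parameter of the closest point, clamped to the segment.
        t = sum(ap[i] * ab[i] for i in range(3)) / length_sq
        t = max(0.0, min(1.0, t))
    closest = [a[i] + t * ab[i] for i in range(3)]
    return math.dist(p, closest)

def quadratic_field(p, bone, radius=1.0):
    """Assumed kernel: 1 on the bone, falling quadratically to 0 at `radius`."""
    a, b = bone
    d = segment_distance(p, a, b)
    return max(0.0, 1.0 - (d / radius) ** 2)

# Hypothetical skeleton: a body along X and one limb growing out of it along Y.
BODY = ((-2.0, 0.0, 0.0), (2.0, 0.0, 0.0))
LIMB = ((0.0, 0.0, 0.0), (0.0, 2.0, 0.0))

def inside_union(p):
    """CSG union: the strongest single field decides."""
    return max(quadratic_field(p, BODY), quadratic_field(p, LIMB)) >= ISO

def inside_sum(p):
    """Summation: fields add up, so the surface bulges near junctions."""
    return quadratic_field(p, BODY) + quadratic_field(p, LIMB) >= ISO

corner = (0.8, 0.8, 0.0)  # a point in the "armpit" between body and limb
print(inside_union(corner), inside_sum(corner))
```

With these numbers the armpit point lies outside the surface under max but inside it under summation, which is exactly the bulging effect: each field alone is too weak there, but together they cross the iso-value.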

But more importantly, this could lead to cases where the isosurface leaves the polygonization domain (i.e. the regions where the field takes the value used to generate the surface lie outside the domain) and holes appear in the resulting surface:

Union of the fields (max(F, G)) | Sum of the fields (F + G)

I assume they’re using Marching Cubes (perhaps not the original one, but a variant with the topological ambiguities fixed). The grid resolution looks like 64x64x64 (I couldn’t get anything bigger in the demo):

In this picture you can see that some of the edges are not axis-aligned; this is because they are deformed by skinning. But most of them clearly result from something similar to regular polygonization.
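For readers who haven’t met Marching Cubes, the two core steps per grid cell can be sketched as follows. This is the textbook scheme, not code from the game; the function names are mine, and the 256-entry triangle table that maps a case index to actual triangles is left out.

```python
def cube_case_index(corner_values, iso):
    """Pack the inside/outside state of the 8 cell corners into one byte.

    The result (0..255) would normally index the triangle lookup table.
    """
    index = 0
    for bit, value in enumerate(corner_values):
        if value >= iso:
            index |= 1 << bit
    return index

def edge_vertex(p0, p1, f0, f1, iso):
    """Place a mesh vertex on the edge p0-p1 by linear interpolation.

    Assumes the edge really crosses the surface, i.e. f0 and f1 lie on
    different sides of `iso` (so f0 != f1).
    """
    t = (iso - f0) / (f1 - f0)
    return tuple(a + t * (b - a) for a, b in zip(p0, p1))
```

Because vertices can only ever sit on cell edges, the unskinned mesh inherits the regular structure of the grid, which is consistent with the mostly regular edges visible in the wireframe.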

After polygonization they use a not too sophisticated algorithm to assign skinning weights. I suppose this procedure might rely on the field values retrieved in the previous step (they can estimate the field contributions of separate bones at the mesh vertices and assign weights accordingly). The vertices store position, normal, UVs, tangents, and indices and weights for 4 transformations (192 constant registers are used for joint matrices).
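The weight assignment I’m guessing at could look roughly like this. It is only a sketch of my assumption that the weights come from per-bone field contributions; `skin_weights` and its input list are hypothetical, and the only detail taken from the demo is the limit of 4 influences per vertex.

```python
def skin_weights(contributions, max_influences=4):
    """Turn per-bone field values at one vertex into skinning data.

    `contributions[i]` is the (assumed) field value bone i produces at the
    vertex. Returns (bone_indices, weights), with weights summing to 1.
    """
    # Rank bones from strongest to weakest contribution.
    ranked = sorted(range(len(contributions)),
                    key=lambda i: contributions[i], reverse=True)
    # Keep at most `max_influences` bones that actually reach the vertex.
    top = [i for i in ranked[:max_influences] if contributions[i] > 0.0]
    total = sum(contributions[i] for i in top)
    if total == 0.0:
        return [], []
    return top, [contributions[i] / total for i in top]

indices, weights = skin_weights([0.1, 0.6, 0.0, 0.3, 0.05, 0.02])
print(indices, weights)
```

The nice property of such a scheme is that it needs no extra data: the field values are already being evaluated for polygonization anyway.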

Some of the template parts you can choose from the palette are most likely hand-made. Some of them (at least all the available heads) are split into a number of clusters containing an equal number of triangles and are drawn in 15-20 DIPs (DrawIndexedPrimitive calls; this is done twice, for the shadow map and the framebuffer). In walking mode, though, a single DIP is used to draw them. I don’t really know why they needed this (maybe for non-uniform size tweaking or more complex composition). These vertices have the same format as the main body mesh:

Obviously there is no blending between these surfaces; the geometry is just rendered before the main body part.

Claws, hands and feet seem to be another type of template. They are drawn in 1 draw call, and each vertex can have only 2 influencing joints (almost rigid skinning):

Another big thing is texturing. Whatever settings I changed, it always remained a 512x512 texture for both the diffuse and the normal map (maybe a demo limitation). I’m not really familiar with techniques for automatic UV unwrapping. The method used in “Spore” relies on surface curvature, using vertex normals to classify vertices (reminiscent of cube mapping). In effect it tries to project mesh clusters uniquely onto the faces of a cube and uses this information for splitting (I also think this information is used for texture generation, e.g. some patterns are placed only on triangles facing the “up” direction):

Each time the parameters of the body or limbs are changed, the UV atlas is recalculated. All template geometry is also added to the texture where the body pattern is specified; the more parts you add, the tighter the texture is packed and the more noticeable aliasing potentially becomes (an increasing number of triangles leaves less texture area for each of them):

In some cases there might be stitching artifacts, so that distortions between triangles can be noticed.
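The cube-face classification mentioned above can be sketched in a few lines. This shows only the classification step as I understand it; a real unwrapper would also have to split each group into connected, non-overlapping clusters and pack them into the atlas. The function names and face labels are mine.

```python
def cube_face(normal):
    """Label a normal with the cube face it points at most directly.

    Same idea as a cube-map lookup: pick the axis with the largest absolute
    component and keep its sign, giving '+x', '-x', '+y', '-y', '+z' or '-z'.
    """
    axis = max(range(3), key=lambda i: abs(normal[i]))
    sign = '+' if normal[axis] >= 0 else '-'
    return sign + 'xyz'[axis]

def split_into_charts(face_normals):
    """Group triangle indices by the cube face their normal points at."""
    charts = {}
    for tri_index, normal in enumerate(face_normals):
        charts.setdefault(cube_face(normal), []).append(tri_index)
    return charts

normals = [(0.1, 0.9, -0.2), (0.0, 0.0, -1.0), (-0.3, 0.8, 0.1)]
print(split_into_charts(normals))
```

The same label would also be a cheap way to restrict some patterns to “up”-facing triangles, assuming “up” is one of the cube axes.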

Once the UV atlas has been created, generation of the actual diffuse and normal textures starts. Low-frequency components of the diffuse are probably specified by a color function defined across the volume. High-frequency details are mostly done by copying small patterns over each other across the texture (for both the diffuse and the normal map); some noise with bilinear interpolation across the samples is added as well. This happens in a few iterations to let the user see results in near-real time:

First step | Second step

Third step | Fourth step

Last one
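The “noise with bilinear interpolation across the samples” part is easy to illustrate. The sketch below is generic value noise on a small wrapping lattice; the lattice size, the seed and the function names are my own choices, and I have no idea what noise function the game actually uses.

```python
import random

def make_lattice(size, seed=0):
    """A size x size grid of random samples in [0, 1)."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(size)] for _ in range(size)]

def bilinear_noise(lattice, u, v):
    """Sample the lattice at texture coordinates (u, v) in [0, 1).

    The four surrounding samples are blended bilinearly, and the lattice
    wraps around at the borders so the noise tiles.
    """
    size = len(lattice)
    x, y = u * size, v * size
    x0, y0 = int(x) % size, int(y) % size
    x1, y1 = (x0 + 1) % size, (y0 + 1) % size
    fx, fy = x - int(x), y - int(y)
    # Blend along X on both rows, then blend the two rows along Y.
    top = lattice[y0][x0] * (1 - fx) + lattice[y0][x1] * fx
    bottom = lattice[y1][x0] * (1 - fx) + lattice[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

lattice = make_lattice(8)
print(bilinear_noise(lattice, 0.3, 0.7))
```

A coarse lattice stretched over a 512x512 texture gives soft blotches rather than per-texel grain, which matches the look of the intermediate steps.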

The number of iterations shown during texture generation most likely depends on the available processing power, so that the result keeps changing about every 1-2 seconds. Finally the normal map is uploaded as well (during diffuse generation a 2x2 dummy normal map is bound to the shader):

I wanted to see whether they change the texture resolution when the number of triangles is pretty high. So it’s time for a stress test (about 12000 triangles):

The texture resolution remained the same, while the seamless UV mapping now seems to be gone:

Thin limbs are now aliased as well:

The sampling rate (in this case, the size of the smallest cube) is not enough to preserve details, so the polygonization algorithm has skipped some places where the surface actually existed and introduced linear-interpolation artifacts in other places. They will probably raise the minimum thickness threshold for limbs in the final version (I doubt the resolution of the polygonization grid will be increased).
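The undersampling is easiest to see in one dimension. In the sketch below a limb cross-section is just an interval on a line sampled at 64 points; the limb thicknesses and positions are invented for the example, and only the 64-cell resolution comes from my observation of the demo.

```python
def inside_samples(center, half_thickness, cell, count):
    """Indices of grid samples (located at i * cell) that fall inside a limb.

    The limb cross-section is modeled as the 1D interval
    (center - half_thickness, center + half_thickness).
    """
    return [i for i in range(count) if abs(i * cell - center) < half_thickness]

CELL = 1.0 / 64  # cell size of a 64-cell grid over a unit-sized domain

# A limb thinner than one cell that happens to sit on a grid sample...
print(inside_samples(0.5, 0.004, CELL, 64))
# ...and the same limb shifted by half a cell, falling between two samples.
print(inside_samples(0.5 + CELL / 2, 0.004, CELL, 64))
# A limb thicker than one cell is always caught by at least one sample.
print(inside_samples(0.5 + CELL / 2, 0.02, CELL, 64))
```

The thin limb is seen by one sample in the first position and by none in the second, so a polygonizer driven by these samples would make it pop in and out as it moves, while the thick limb survives either way. That is why raising the minimum limb thickness is the cheap fix compared to refining the grid.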

This is surely just the tip of the iceberg. The tech behind this game looks really advanced. I would even say some of the solutions are totally revolutionary and ahead of all other recent games. It’s still hard to figure out whether the game is going to be fun to play, but this Creature Creator Demo by itself is a great example of how creation can be more exciting than destruction (yeah, call me a pussy).
